Technical specifications

Login node

One login node provides a virtual desktop for visualisation, shared campaign storage, Secure Shell (SSH) access for users and the Slurm workload manager:

- 48 Cores (2 x 24-core AMD Milan processors)

- 128 GB Memory (8 GB x 16)

- ~10.7 TB of shared storage space

- GPU Card for virtual desktop (Nvidia Ampere A40)

Compute nodes

Eight compute nodes providing 384 cores in total, each with:

- 48 Cores (2 x 24-core AMD Milan processors)

- 256 GB Memory (16 GB x 16), about 5.3 GB per core

- 960 GB NVMe based fast local storage
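The headline figures follow directly from the per-node numbers. As a quick check, the arithmetic can be sketched in Python; the variable names are illustrative only, and the memory figures are installed capacity, so the amount actually available to jobs is likely to be somewhat lower.

```python
# Per-node figures taken from the compute node list above.
NODES = 8
CORES_PER_NODE = 48        # 2 processors x 24 cores
MEMORY_PER_NODE_GB = 256   # 16 modules x 16 GB

# Cluster-wide totals and the memory share of a single core.
total_cores = NODES * CORES_PER_NODE
total_memory_gb = NODES * MEMORY_PER_NODE_GB
memory_per_core_gb = MEMORY_PER_NODE_GB / CORES_PER_NODE

print(f"Total cores: {total_cores}")
print(f"Total memory: {total_memory_gb} GB")
print(f"Memory per core: {memory_per_core_gb:.1f} GB")
```

The per-core figure is a useful starting point when deciding how much memory to request alongside a given number of cores.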

Software environment

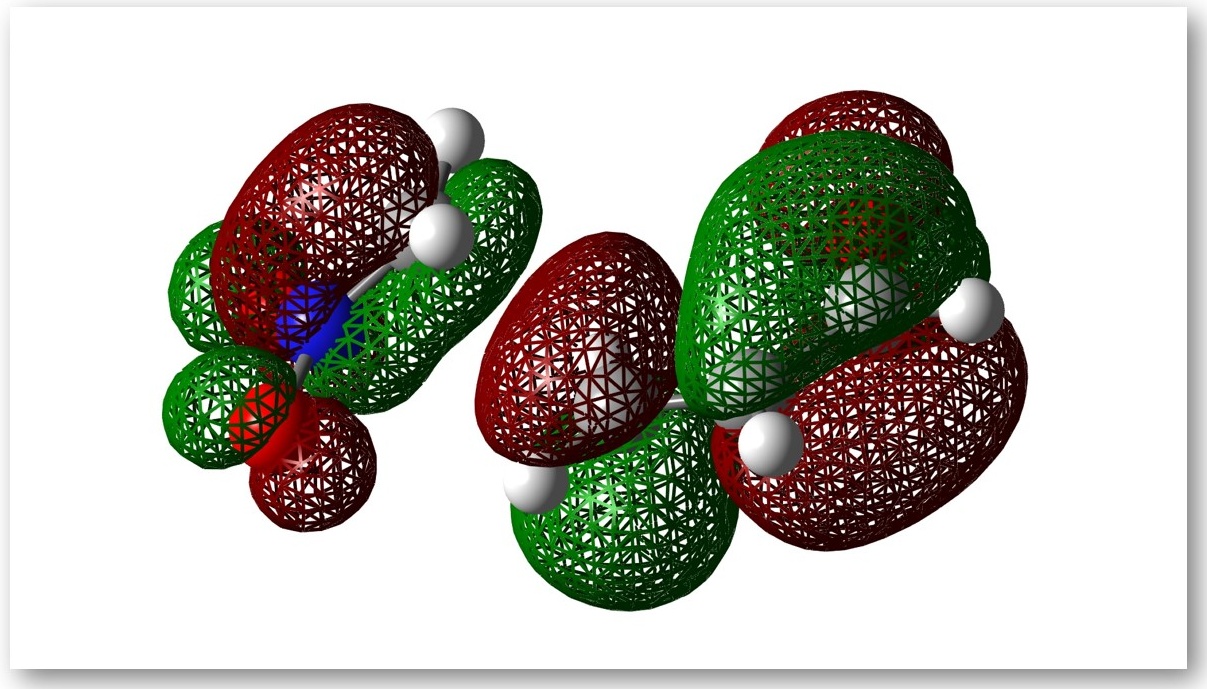

- Gaussian 09, Gaussian 16 and GaussView

- Red Hat Enterprise Linux 8

- Slurm scheduler

- Module-based software environments

- Intel and GNU C and Fortran compilers

- Intel and open-source maths libraries and MPI libraries

- Intel debugger and profiling tools
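To illustrate how the scheduler and the module environment fit together, here is a minimal sketch that renders a Slurm batch script for a single-node Gaussian 16 job. It is not the official Anatra job template. The function `render_gaussian_job` and the module name `gaussian` are assumptions made for illustration; check `module avail` on the cluster for the real module name. The limits of 48 cores and 256 GB come from the compute node specification above.

```python
from pathlib import Path

# Upper bounds taken from the compute node specification.
MAX_CORES_PER_NODE = 48
MAX_MEMORY_GB = 256


def render_gaussian_job(job_name, input_file, cpus=8, mem_gb=32,
                        time_limit="01:00:00", module_name="gaussian"):
    """Return the text of a Slurm batch script for one Gaussian 16 run.

    `module_name` is a hypothetical placeholder, not a confirmed
    module name on Anatra.
    """
    if not 1 <= cpus <= MAX_CORES_PER_NODE:
        raise ValueError(f"cpus must be between 1 and {MAX_CORES_PER_NODE}")
    if not 1 <= mem_gb <= MAX_MEMORY_GB:
        raise ValueError(f"mem_gb must be between 1 and {MAX_MEMORY_GB}")

    # Write the Gaussian log next to the input file, with a .log suffix.
    log_file = Path(input_file).with_suffix(".log")

    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        "#SBATCH --nodes=1",
        "#SBATCH --ntasks=1",
        f"#SBATCH --cpus-per-task={cpus}",
        f"#SBATCH --mem={mem_gb}G",
        f"#SBATCH --time={time_limit}",
        "",
        f"module load {module_name}  # assumed module name",
        f"g16 < {input_file} > {log_file}",
    ]
    return "\n".join(lines) + "\n"


script = render_gaussian_job("water-opt", "water.com", cpus=8, mem_gb=32)
print(script)
```

The printed text could be saved to a file and submitted with `sbatch`. Validating the request against the node size before submission avoids jobs that can never be scheduled on a single node.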

Use Anatra for your research

Anatra is now available for use by researchers and postgraduate students at the University. The high throughput computing (HTC) cluster is earmarked for software applications that can't move to the cloud, such as Gaussian and GaussView.

Request an Anatra project account if you would like to use the cluster for your research.

View the step-by-step training material from our one-hour Anatra onboarding workshops.

Anatra forms part of the University's new three-pillar research computing infrastructure, which also includes:

- Bath's powerful cloud high performance computing (HPC) environment within Microsoft Azure, and

- The GW4 Isambard HPC service

Acknowledging Research Computing

Research Computing provides state-of-the-art high performance computing and research software engineering support to the academic community, significantly impacting the quality of the resultant research output.

Find out how to acknowledge us in your publications.